Connection refused when Prometheus scrapes target metrics

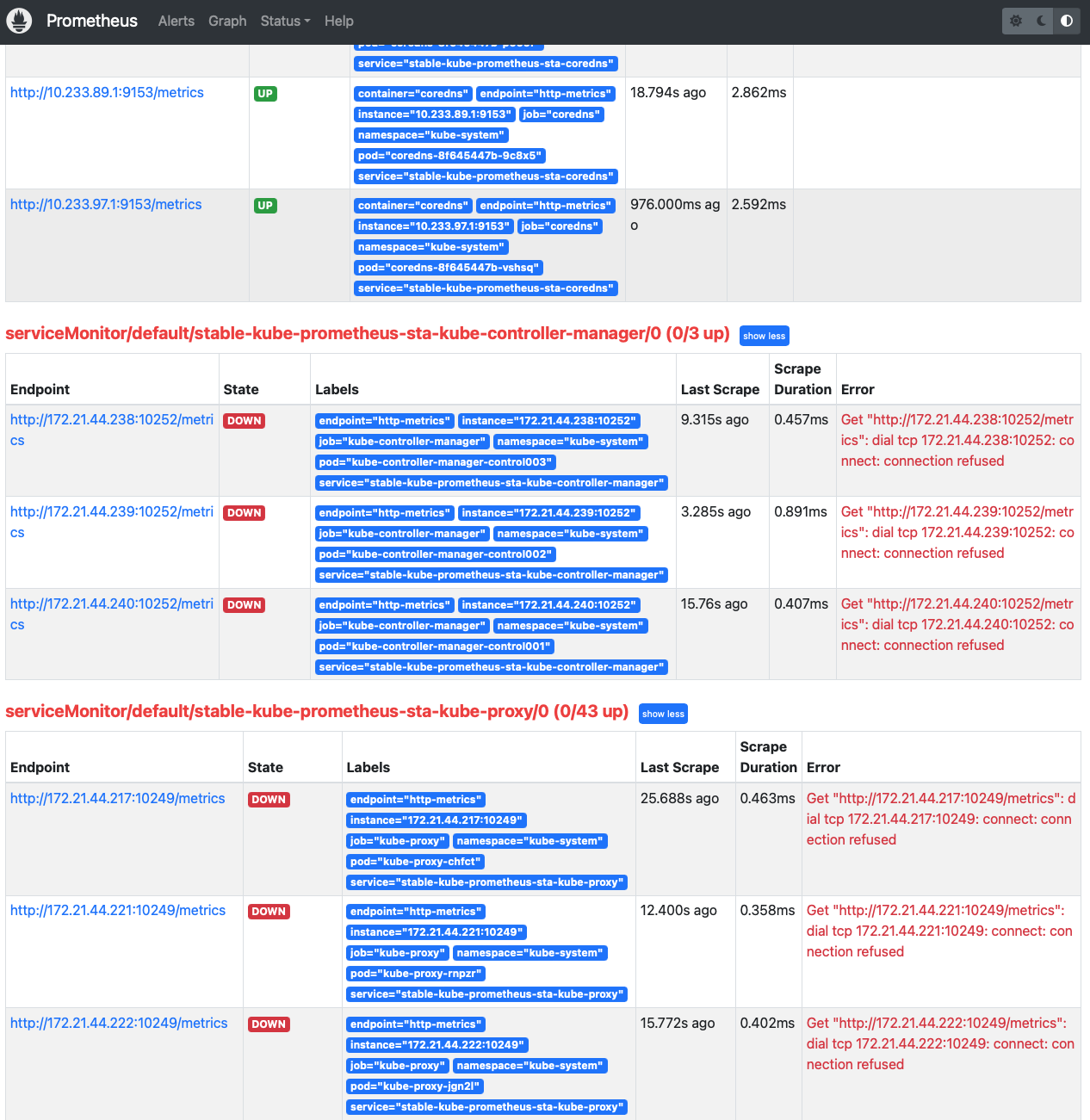

After deploying Prometheus and Grafana on a Kubernetes cluster with Helm 3, I noticed something odd: almost every target listed under Status >> Targets in Prometheus was in the Down state:

Targets shown as Down under Status >> Targets in Prometheus

The ports being scraped are:

| Port | Monitored component | Fix |
|---|---|---|
| 6443 | apiserver | |
| 8080 | kube-state-metrics (cAdvisor container performance analysis component) | |
| 9090 | prometheus | |
| 9093 | alertmanager | |
| 9100 | node-exporter | |
| 9153 | coredns | |
| 10249 | kube-proxy | |
| 10250 | kubelet | Monitoring kubelet, kube-controller-manager and kube-scheduler with Prometheus |
| 10251 | scheduler | Monitoring kubelet, kube-controller-manager and kube-scheduler with Prometheus |
| 10252 | controller-manager | Monitoring kubelet, kube-controller-manager and kube-scheduler with Prometheus |

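Targets whose metrics cannot be reached usually belong to components running with a hardened configuration, meaning the metrics endpoint listens only on the loopback address. For example, kube-proxy's metricsBindAddress (the --metrics-bind-address flag) is set to 127.0.0.1:10249, so a Prometheus instance connecting to the node IP gets connection refused.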
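To make the loopback distinction concrete, here is a minimal sketch of a check that classifies a bind address. The helper name `is_loopback_bind` is hypothetical and is not part of any Kubernetes tooling; it only looks at the string.

```shell
#!/bin/sh
# Hypothetical helper (not from the original article): succeeds when a
# host:port bind address only accepts connections from the node itself.
is_loopback_bind() {
    case "$1" in
        127.*:*|localhost:*|"[::1]":*) return 0 ;;
        *) return 1 ;;
    esac
}

# Classify the two values discussed in this article.
for addr in 127.0.0.1:10249 0.0.0.0:10249; do
    if is_loopback_bind "$addr"; then
        echo "$addr: loopback only, not reachable from other hosts"
    else
        echo "$addr: reachable from other hosts"
    fi
done
```

On a real cluster the value to classify would come from the kube-proxy ConfigMap, for example by looking for the metricsBindAddress line in the output of `kubectl -n kube-system get cm kube-proxy -o yaml`.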

Exposing port 10249 on kube-proxy

The fix, using kube-proxy as the example:

$ kubectl edit cm/kube-proxy -n kube-system

## Change from

metricsBindAddress: 127.0.0.1:10249 ### <--- Too secure

## Change to

metricsBindAddress: 0.0.0.0:10249

$ kubectl delete pod -l k8s-app=kube-proxy -n kube-system

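Note that deleting the kube-proxy pods causes a brief network interruption, so either migrate the workloads first or make the change during off-peak hours.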
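The interactive edit above can also be expressed as a text filter, which is easier to review and repeat. This is a sketch under assumptions: the function name `rewrite_metrics_bind` is made up for illustration, it replaces whatever value metricsBindAddress currently has, and the kubectl pipeline in the trailing comments assumes a kubeadm-style cluster where the DaemonSet is named kube-proxy.

```shell
#!/bin/sh
# Hypothetical filter: read kube-proxy configuration text on stdin and
# set metricsBindAddress to 0.0.0.0:10249, keeping the line's indentation.
rewrite_metrics_bind() {
    sed -E 's/^([[:space:]]*metricsBindAddress:).*$/\1 0.0.0.0:10249/'
}

# Demonstration on a small sample instead of a live cluster.
printf '%s\n' \
    'kind: KubeProxyConfiguration' \
    'metricsBindAddress: 127.0.0.1:10249' \
    'mode: ""' | rewrite_metrics_bind

# Possible use against a cluster (assumption, review the output first):
#   kubectl -n kube-system get cm kube-proxy -o yaml \
#     | rewrite_metrics_bind | kubectl apply -f -
#   kubectl -n kube-system rollout restart daemonset kube-proxy
```

A rolling restart of the DaemonSet replaces the pods gradually rather than deleting them all at once, which may soften the interruption mentioned above.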

Exposing port 10251 on the scheduler

The scrape error reported for the scheduler target:

Get "http://172.21.44.238:10251/metrics": dial tcp 172.21.44.238:10251: connect: connection refused
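When many targets fail, it can help to pull the failing host:port out of each scrape error before probing it by hand. The sketch below assumes the error text has the same shape as the line above; `target_from_error` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: extract the host:port that refused the connection
# from a Prometheus scrape error of the form shown in this article.
target_from_error() {
    printf '%s\n' "$1" | sed -n 's/.*dial tcp \([^ ]*\): connect: .*/\1/p'
}

err='Get "http://172.21.44.238:10251/metrics": dial tcp 172.21.44.238:10251: connect: connection refused'
target_from_error "$err"

# The extracted address can then be probed from another host, e.g.:
#   curl -s "http://$(target_from_error "$err")/metrics" | head
```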

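For this connection refused error, the article "Kubernetes control plane component monitoring: scheduler, controller-manager, proxy, kubelet, etcd" gives a good summary. In addition, "Kubectl get componentstatus fails for scheduler and controller-manager" offers a useful approach: first check componentstatus (abbreviated cs). Checking kube-apiserver also helps.

Check componentstatus (cs):

kubectl get cs (componentstatus) reveals why monitoring fails

kubectl get cs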

The output:

Output of kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

As shown, the health status of both controller-manager and scheduler in this cluster cannot be retrieved (connection refused).
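The same check can be scripted. As a minimal sketch, the hypothetical filter `unhealthy_components` below reads the table printed by `kubectl get cs` and prints the name of every component whose STATUS column is not Healthy; the sample input is copied from the output above.

```shell
#!/bin/sh
# Hypothetical filter: list components that `kubectl get cs` does not
# report as Healthy. Expects the command's table output on stdin.
unhealthy_components() {
    awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

# Demonstration with the output shown in this article.
unhealthy_components <<'EOF'
NAME STATUS MESSAGE ERROR
controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused
scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused
etcd-0 Healthy {"health":"true"}
EOF

# Against a cluster: kubectl get cs | unhealthy_components
```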

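In newer Kubernetes versions (the project where I hit this ran 1.18.10), the default component configuration lets apiserver serve metrics but turns off the HTTP metrics endpoints of controller-manager and scheduler. On the control plane servers, the /etc/kubernetes/manifests directory contains the following configuration files: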

kube-apiserver.yaml

kube-controller-manager.yaml

kube-scheduler.yaml

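These three files determine how the three core control plane components run:

kube-scheduler's HTTP port flag, --port int, defaults to 10251. If it is set to 0, HTTP is not served at all: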

With the --port=0 argument, kube-scheduler disables HTTP access, so the default metrics cannot be scraped

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=0.0.0.0
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    - --port=0
    image: lank8s.cn/kube-scheduler:v1.18.10
    imagePullPolicy: IfNotPresent
...
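To see which value a manifest actually sets without reading the whole file, a small extractor is enough. This is only a sketch: `manifest_flag` is a hypothetical helper that assumes each argument sits on its own `- --name=value` line, as in the manifest above.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a `- --<flag>=<value>` command
# argument in a static pod manifest.
#   $1 = flag name without the leading dashes, $2 = manifest path
manifest_flag() {
    sed -n "s/^[[:space:]]*- --$1=\(.*\)$/\1/p" "$2"
}

# Demonstration on a temporary copy of the relevant lines.
sample=$(mktemp)
printf '%s\n' \
    '    - kube-scheduler' \
    '    - --leader-elect=true' \
    '    - --port=0' > "$sample"
manifest_flag port "$sample"
rm -f "$sample"

# On a control plane server:
#   manifest_flag port /etc/kubernetes/manifests/kube-scheduler.yaml
```

Running the same check on every control plane server shows quickly whether any manifest still carries --port=0.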

However, I then edited /etc/kubernetes/manifests/kube-scheduler.yaml directly on the control plane server:

...

- --port=10251

...

After the kube-scheduler pod was recreated, though, its running arguments were still --port=0. Editing the pod directly with kubectl -n kube-system edit pods kube-scheduler-control001 does not work either:

Trying to set --port=10251 with kubectl -n kube-system edit pods kube-scheduler-control001 is rejected

...
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=0.0.0.0
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    - --port=0
    image: lank8s.cn/kube-scheduler:v1.18.10
    imagePullPolicy: IfNotPresent
    livenessProbe:
...

The error:

Error returned when trying to set --port=10251 with kubectl -n kube-system edit pods kube-scheduler-control001

# pods "kube-scheduler-control001" was not valid:

# * spec: Forbidden: pod updates may not change fields other than `spec.containers[*].image`, `spec.initContainers[*].image`, `spec.activeDeadlineSeconds` or `spec.tolerations` (only additions to existing tolerations)

# core.PodSpec{

# Volumes: []core.Volume{{Name: "kubeconfig", VolumeSource: core.VolumeSource{HostPath: &core.HostPathVolumeSource{Path: "/etc/kubernetes/scheduler.conf", Type: &"FileOrCreate"}}}},

# InitContainers: nil,

# Containers: []core.Container{

# {

# Name: "kube-scheduler",

# Image: "lank8s.cn/kube-scheduler:v1.18.10",

# Command: []string{

# ... // 4 identical elements

# "--kubeconfig=/etc/kubernetes/scheduler.conf",

# "--leader-elect=true",

# + "--port=0",

# },

# Args: nil,

# WorkingDir: "",

# ... // 17 identical fields

# },

# },

# EphemeralContainers: nil,

# RestartPolicy: "Always",

# ... // 24 identical fields

# }
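The rejection is expected for any pod, because the API server only allows the few fields named in the message to change. For control plane pods there is a further reason to leave the pod object alone: pods created from files in /etc/kubernetes/manifests are static pods, and the object visible through the API is a mirror that the kubelet maintains. As a sketch, the hypothetical check `is_static_pod` below looks for the kubernetes.io/config.mirror annotation in a pod's YAML; the sample annotation value is made up.

```shell
#!/bin/sh
# Hypothetical check: succeeds when the pod YAML on stdin carries the
# mirror-pod annotation that the kubelet adds to static pods.
is_static_pod() {
    grep -Eq '^[[:space:]]*kubernetes\.io/config\.mirror:'
}

# Demonstration on sample YAML (the hash value is a placeholder).
if printf '%s\n' \
    'metadata:' \
    '  annotations:' \
    '    kubernetes.io/config.mirror: 0123456789abcdef' | is_static_pod
then
    echo "static pod: change the manifest on the node, not the pod object"
fi

# Against a cluster:
#   kubectl -n kube-system get pod kube-scheduler-control001 -o yaml | is_static_pod
```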